Today we will talk about protecting the CPU from broadcast/multicast traffic on the Extreme X670 platform. These considerations also apply to the X670V.

X670 family switches are built on the old Broadcom Trident+ chipset. Compared with switches in the same class, such as the Huawei S6700-48-EI or the Cisco N3K-3064, they have practically no control plane protection at all.

XOS has a built-in protection feature, dos-protect. However, it will not save you in the event of a broadcast or multicast storm.

The best practice for protecting against BUM (broadcast, unknown unicast and multicast) traffic is storm control, which in XOS is the rate-limit flood option. Here we get another unpleasant surprise. The X670 G1 platform runs XOS 16.x, in which rate-limit flood is implemented with tokens refreshed every 15.625 microseconds. Without going into detail, I'll say right away: because of the imperfection of this mechanism, rate-limit flood will drop legitimate traffic.

You can read more on the extremenetworks.com forum.
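To get a feel for how a token-based limiter can drop legitimate traffic, here is a generic token-bucket model in Python. Only the 15.625 microsecond refresh interval comes from the text above; the bucket depth, the burst pattern and the names `police` and `bursty_traffic` are assumptions for illustration. This is not a reproduction of how XOS or the Trident+ chip actually implements rate-limit flood.

```python
from fractions import Fraction
from typing import Iterable, List, Tuple

TICK_NS = 15_625  # 15.625 microsecond token refresh interval quoted for XOS 16.x


def police(arrivals_ns: Iterable[int], rate_pps: int, depth_tokens: int) -> Tuple[int, int]:
    """Token bucket refilled once per tick; returns (passed, dropped).

    arrivals_ns must be sorted. The bucket starts full, never holds more
    than depth_tokens tokens, and each frame consumes one whole token.
    """
    per_tick = Fraction(rate_pps * TICK_NS, 1_000_000_000)  # exact tokens added per tick
    depth = Fraction(depth_tokens)
    tokens = depth
    last_tick = 0
    passed = dropped = 0
    for t in arrivals_ns:
        tick = t // TICK_NS
        if tick > last_tick:  # credit every tick that elapsed since the previous frame
            tokens = min(depth, tokens + per_tick * (tick - last_tick))
            last_tick = tick
        if tokens >= 1:
            tokens -= 1
            passed += 1
        else:
            dropped += 1
    return passed, dropped


def bursty_traffic(bursts_per_sec: int, burst_len: int, gap_ns: int, seconds: int = 1) -> List[int]:
    """Evenly spaced bursts of back-to-back frames; average rate = bursts_per_sec * burst_len pps."""
    period = 1_000_000_000 // bursts_per_sec
    return [b * period + i * gap_ns
            for b in range(bursts_per_sec * seconds)
            for i in range(burst_len)]


if __name__ == "__main__":
    # 67 ns spacing is roughly minimum-size frames back to back at 10 Gbps.
    traffic = bursty_traffic(50, 10, gap_ns=67)  # 500 pps on average
    print(police(traffic, rate_pps=1000, depth_tokens=1))   # (50, 450)
    print(police(traffic, rate_pps=1000, depth_tokens=10))  # (500, 0)
```

With a 1,000 pps limit and a hypothetical depth of one token, 50 bursts of 10 frames per second (500 pps on average, half the limit) lose 450 of 500 frames, while a 10-token bucket drops nothing. A limiter can therefore look correct on average and still punish bursty but legitimate traffic.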

The methods described here have been used successfully as the last line of CPU protection on L2 aggregation switches. However, best practice is to protect at the access layer.

Theory:

So, it's time to figure out which traffic is lifted to the CPU, and into which queue:

Queue 0 : Broadcast and IPv6 packets

Queue 1 : sFlow packets

Queue 2 : vMAC destined packets (VRRP MAC and ESRP MAC)

Queue 3 : L3 Miss packets (ARP request not resolved) or L2 Miss packets (Software MAC learning)

Queue 4 : Multicast traffic not hitting hardware ipmc table (224.0.0.0/4 normal IP multicast packets neither IGMP nor PIM)

Queue 5 : ARP reply packets or packets destined for switch itself

Queue 6 : IGMP or PIM packets

Queue 7 : Packets whose TOS field is "0xc0" and Ethertype is "0x0800", or STP, EAPS, EDP, OSPF packets

When an L2 loop occurs, broadcast, IPv6 and multicast traffic is lifted to the CPU.
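The queue map above can be written as a small classifier, which is handy when reasoning about which queue a given flood will hit. This is a Python sketch: the `Packet` fields such as `lookup_miss` and `ipmc_hit` are hypothetical flags standing in for the switch's lookup results, and checking the rules from queue 7 down to queue 0 is an assumed precedence, not documented XOS behavior.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

BROADCAST_MAC = "ff:ff:ff:ff:ff:ff"
ETH_IPV4 = 0x0800
ETH_IPV6 = 0x86DD
MCAST_V4 = ipaddress.ip_network("224.0.0.0/4")


@dataclass
class Packet:
    dst_mac: str
    ethertype: int
    tos: int = 0
    dst_ip: Optional[str] = None
    proto: Optional[str] = None  # hypothetical label: "stp", "ospf", "igmp", "arp-reply", "sflow", ...
    to_switch: bool = False      # destined for the switch itself
    vmac: bool = False           # VRRP/ESRP virtual MAC destination
    lookup_miss: bool = False    # L2 miss (software learning) or L3 miss (unresolved ARP)
    ipmc_hit: bool = False       # matched the hardware IP multicast table


def cpu_queue(p: Packet) -> Optional[int]:
    """Return the CPU queue for a packet, or None if it is handled in hardware."""
    if p.proto in {"stp", "eaps", "edp", "ospf"} or (p.ethertype == ETH_IPV4 and p.tos == 0xC0):
        return 7
    if p.proto in {"igmp", "pim"}:
        return 6
    if p.proto == "arp-reply" or p.to_switch:
        return 5
    if (p.ethertype == ETH_IPV4 and p.dst_ip is not None
            and ipaddress.ip_address(p.dst_ip) in MCAST_V4 and not p.ipmc_hit):
        return 4
    if p.lookup_miss:
        return 3
    if p.vmac:
        return 2
    if p.proto == "sflow":
        return 1
    if p.dst_mac.lower() == BROADCAST_MAC or p.ethertype == ETH_IPV6:
        return 0
    return None
```

For example, a flood of IPv4 multicast to 239.1.1.1 that misses the hardware table lands in queue 4, while broadcast ARP and IPv6 RAs both land in queue 0.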

To capture the traffic hitting the CPU and analyze the current situation, you can use this command:

    debug packet capture on interface Broadcom count 1000

The capture is saved in /usr/local/tmp.

You can use TFTP or SCP to copy the dump to a local machine for further analysis:

    tftp put 11.1.1.1 vr "VR-Default" /usr/local/tmp/2020-02-10_16-54-22_rx_tx.pcap 2020-02-10_16-54-22_rx_tx.pcap
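For a first look at the dump, a few lines of Python are enough to count broadcast, IPv6, multicast and unicast frames. This sketch uses only the standard library and assumes the file is a classic libpcap capture with Ethernet framing (link type 1); pcapng files, or captures that carry extra internal headers, are not handled.

```python
import struct
from collections import Counter
from typing import BinaryIO

# Classic libpcap magic numbers (microsecond and nanosecond variants) -> byte order.
_MAGIC = {
    b"\xd4\xc3\xb2\xa1": "<", b"\xa1\xb2\xc3\xd4": ">",
    b"\x4d\x3c\xb2\xa1": "<", b"\xa1\xb2\x3c\x4d": ">",
}
LINKTYPE_ETHERNET = 1


def classify_frame(frame: bytes) -> str:
    """Bucket an Ethernet frame roughly the way the CPU queue list groups traffic."""
    if len(frame) < 14:
        return "runt"
    dst = frame[:6]
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype == 0x8100 and len(frame) >= 18:  # skip a single 802.1Q tag
        (ethertype,) = struct.unpack("!H", frame[16:18])
    if dst == b"\xff" * 6:
        return "broadcast"
    if ethertype == 0x86DD:
        return "ipv6"
    if dst[0] & 0x01:  # I/G bit set: group (multicast) destination
        return "multicast"
    return "unicast"


def summarize_pcap(f: BinaryIO) -> Counter:
    """Count frame types in a classic (non-pcapng) capture file."""
    header = f.read(24)
    if len(header) < 24 or header[:4] not in _MAGIC:
        raise ValueError("not a classic libpcap file")
    endian = _MAGIC[header[:4]]
    (linktype,) = struct.unpack(endian + "I", header[20:24])
    if linktype != LINKTYPE_ETHERNET:
        raise ValueError(f"unexpected link type {linktype}")
    counts: Counter = Counter()
    while True:
        record = f.read(16)
        if len(record) < 16:
            break
        _sec, _frac, incl_len, _orig_len = struct.unpack(endian + "IIII", record)
        frame = f.read(incl_len)
        if len(frame) < incl_len:
            break  # truncated last record
        counts[classify_frame(frame)] += 1
    return counts
```

Opening the copied file in binary mode and passing it to `summarize_pcap` returns a `Counter` such as `{"broadcast": ..., "multicast": ...}`, which tells you at a glance which CPU queues the regular traffic is loading.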

Now we can analyze the regular traffic hitting the CPU. Time to build the protection.

Practice:

First, let's see what happens to the CPU without any protection when we flood the switch with generated broadcast and multicast traffic.

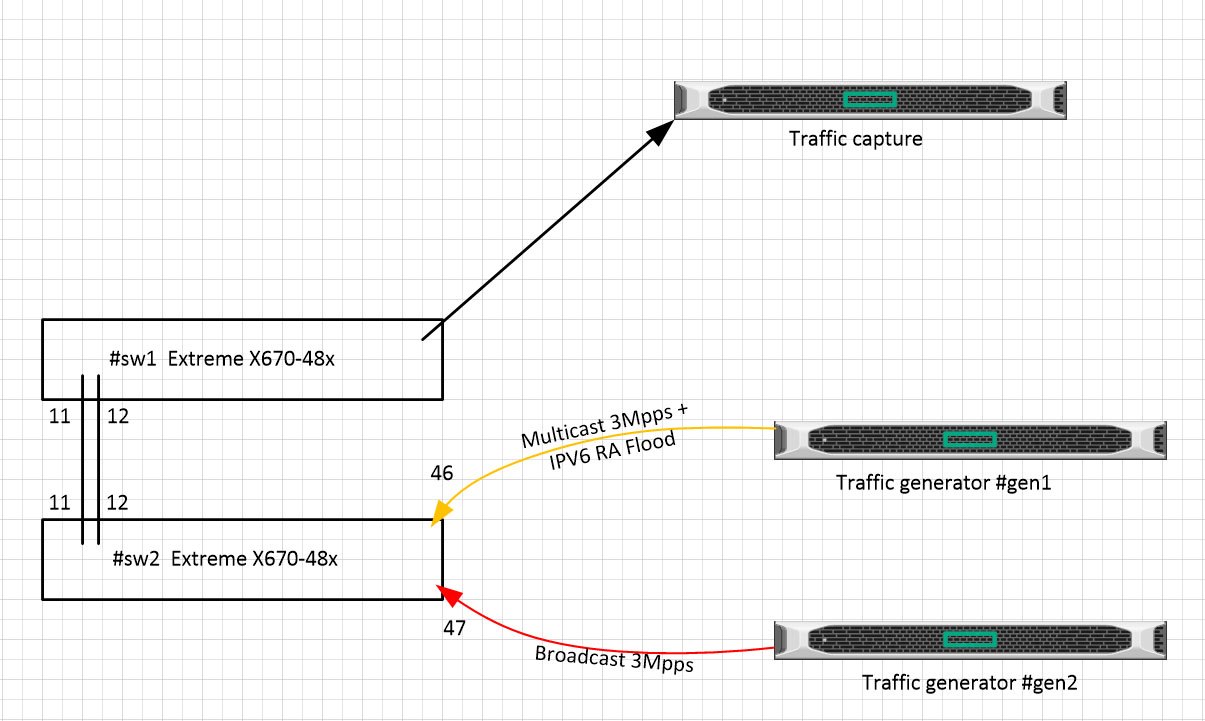

Lab scheme:

I'm using the Linux pktgen utility to generate broadcast/multicast traffic, and an nmap script to generate an IPv6 RA flood.

Multicast traffic and IPv6 RAs are generated on the gen1 server; broadcast is generated on the gen2 server.

The average generation rate is 3Mpps.

With the traffic generators running, here is the CPU usage:

    sw2# sh cpu-monitoring | exclude 0.0
    CPU Utilization Statistics - Monitored every 5 seconds
    -----------------------------------------------------------------------
    Process         5    10    30     1     5    30     1   Max            Total
                 secs  secs  secs   min  mins  mins  hour            User/System
                 util  util  util  util  util  util  util  util        CPU Usage
                  (%)   (%)   (%)   (%)   (%)   (%)   (%)   (%)           (secs)
    -----------------------------------------------------------------------
    System       52.2  50.3  50.4  47.3  48.9  26.0  13.0  69.7    0.32   964.28
    mcmgr        39.9  40.4  41.6  43.0  38.6  10.5   5.2  47.1   46.47   356.98

    sw2#top
    Load average: 6.57 5.38 4.74 4/208 2905
      PID  PPID USER     STAT   RSS %MEM CPU %CPU COMMAND
     1327     2 root     RW       0  0.0   1 41.2 [bcmRX]
     1543     1 root     R     3852  0.3   0 40.4 ./mcmgr

    sw2# debug hal show device port-info system unit 0 | include cpu
    MC_PERQ_PKT(0).cpu0  :   1,131,571     +62,271     5,776/s
    MC_PERQ_PKT(4).cpu0  :     187,781     +42,768     5,353/s
    MC_PERQ_PKT(7).cpu0  :         503         +17
    MCQ_DROP_PKT(0).cpu0 : 174,095,428 +29,103,098 3,298,296/s
    MCQ_DROP_PKT(4).cpu0 :  78,082,718 +17,834,191 1,021,107/s

    sw2# debug hal show congestion
    Congestion information for Summit type X670-48x since last query
    CPU congestion present: 12414120

So, with about 6 Mpps of transit broadcast/multicast traffic, the CPU is at 100% load, yet only about 10 Kpps makes it into the CPU queues; everything else is dropped. The traffic actually offered to the CPU queues is many times higher: the MCQ_DROP_PKT counters show roughly 4.3 Mpps of drops.
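When comparing captures like this one, it helps to sum the per-queue rates automatically. The Python sketch below parses the `debug hal show device port-info` lines shown above; reading MC_PERQ_PKT as "delivered to the CPU queue" and MCQ_DROP_PKT as "dropped at the CPU queue" follows this article's interpretation and is an assumption, not documented Broadcom semantics. A line without a `/s` column is counted as 0 pps.

```python
import re
from typing import Dict

# Matches counter lines such as
#   MC_PERQ_PKT(0).cpu0 : 1,131,571 +62,271 5,776/s
#   MC_PERQ_PKT(7).cpu0 : 503 +17            (no rate column)
_LINE = re.compile(
    r"^(?P<name>MC_PERQ_PKT|MCQ_DROP_PKT)\((?P<queue>\d+)\)\.cpu0\s*:\s*"
    r"(?P<total>[\d,]+)\s+\+(?P<delta>[\d,]+)(?:\s+(?P<rate>[\d,]+)/s)?"
)


def _to_int(text: str) -> int:
    return int(text.replace(",", ""))


def cpu_queue_rates(output: str) -> Dict[str, Dict[int, int]]:
    """Per-queue rates in pps, keyed by counter name and then queue number."""
    rates: Dict[str, Dict[int, int]] = {"MC_PERQ_PKT": {}, "MCQ_DROP_PKT": {}}
    for line in output.splitlines():
        m = _LINE.match(line.strip())
        if m:
            rate = _to_int(m["rate"]) if m["rate"] else 0
            rates[m["name"]][int(m["queue"])] = rate
    return rates


def summarize(output: str) -> Dict[str, int]:
    """Total delivered and dropped pps across all CPU queues."""
    rates = cpu_queue_rates(output)
    return {
        "delivered_pps": sum(rates["MC_PERQ_PKT"].values()),
        "dropped_pps": sum(rates["MCQ_DROP_PKT"].values()),
    }
```

Fed the output captured above, `summarize` reports 11,129 pps delivered against 4,319,403 pps dropped, which is where the "about 10 Kpps" and "roughly 4.3 Mpps" figures come from.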

Now let’s move on to the filters and see what we can do.

To protect Queue 0 (broadcast and IPv6 packets), I will create the policy limit-bc-ipv6.

Use this config only if you are NOT using any L3 IPv6 features, because its first entry keeps all IPv6 packets away from the CPU.

    create meter limit-broadcast
    configure meter limit-broadcast committed-rate 1000 Pps out-actions drop

    sw#show policy limit-bc-ipv6
    entry match-v6 {
        if match all {
            ethernet-type 0x86DD ;
        }
        then {
            deny-cpu ;
        }
    }
    entry match-bc {
        if match all {
            ethernet-destination-address ff:ff:ff:ff:ff:ff ;
        }
        then {
            meter limit-broadcast ;
        }
    }
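The intent of the two entries can be modelled in a few lines of Python. This sketch assumes the entries are evaluated in order and the first match decides; `Frame`, `WindowMeter` and `apply_policy` are hypothetical names, and the fixed one-second window is a deliberate simplification of the 1000 pps meter (real hardware meters typically work as token buckets).

```python
from dataclasses import dataclass

IPV6_ETHERTYPE = 0x86DD
BROADCAST_MAC = "ff:ff:ff:ff:ff:ff"


@dataclass
class Frame:
    dst_mac: str
    ethertype: int
    t_ns: int  # arrival time in nanoseconds


class WindowMeter:
    """Fixed one-second window: admits at most rate_pps frames per second."""

    def __init__(self, rate_pps: int) -> None:
        self.rate_pps = rate_pps
        self.window = -1
        self.count = 0

    def conforms(self, t_ns: int) -> bool:
        window = t_ns // 1_000_000_000
        if window != self.window:  # a new second starts: reset the counter
            self.window, self.count = window, 0
        self.count += 1
        return self.count <= self.rate_pps


def apply_policy(frame: Frame, meter: WindowMeter) -> str:
    """Evaluate the limit-bc-ipv6 entries in order; the first match decides."""
    if frame.ethertype == IPV6_ETHERTYPE:       # entry match-v6 -> deny-cpu
        return "deny-cpu"
    if frame.dst_mac.lower() == BROADCAST_MAC:  # entry match-bc -> meter limit-broadcast
        return "permit" if meter.conforms(frame.t_ns) else "drop"
    return "permit"                             # no entry matched
```

Fed 3,000 broadcast frames within one second, the sketch permits 1,000 and drops 2,000, while IPv6 frames never reach the meter at all.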

This policy can be applied to all ports, or only to those where a storm is possible.

    configure access-list limit-bc-ipv6 ports 1-48 ingress

For a complete simulation, I will create a loop between ports 11 and 12.

    sw2# sh ports 11-12,46-47 utilization
    Link Utilization Averages                 Fri Apr 3 14:55:26 2020
    Port  Link          Rx   Peak Rx        Tx   Peak Tx
          State   pkts/sec  pkts/sec  pkts/sec  pkts/sec
    ===========================================================================
    11    A        7484877   8725834   7483368   8724075
    12    A        7471259   8710066   7472785   8711816
    46    A        2962834   3716425   7435635   8690736
    47    A        2807846   3931673   7304028   8541282

About 3,000 pps of broadcast is lifted to the CPU; the rest is multicast.

    sw2# debug hal show device port-info system unit 0 | include cpu
    MC_PERQ_PKT(0).cpu0  :   2,456,584    +290,921     3,043/s
    MC_PERQ_PKT(3).cpu0  :   3,104,008  +1,498,923     4,102/s
    MC_PERQ_PKT(4).cpu0  :   3,125,047    +394,781     4,102/s
    MC_PERQ_PKT(6).cpu0  :   1,222,571    +135,286     4,090/s

CPU utilization is still 100%:

    sw2# sh cpu-monitoring
    CPU Utilization Statistics - Monitored every 5 seconds
    -----------------------------------------------------------------------
    Process         5    10    30     1     5    30     1   Max            Total
                 secs  secs  secs   min  mins  mins  hour            User/System
                 util  util  util  util  util  util  util  util        CPU Usage
                  (%)   (%)   (%)   (%)   (%)   (%)   (%)   (%)           (secs)
    -----------------------------------------------------------------------
    System       50.3  48.9  46.9  51.4  58.1  31.7  15.8  68.8    0.32  1180.54
    mcmgr        43.9  43.1  44.8  31.0   6.3   6.3   3.1  48.5   33.33   198.40

Let's try to protect the CPU from multicast.

To do so, you need to disable IGMP snooping on all VLANs configured on the port where the storm occurs.

If IGMP snooping is enabled on a VLAN, multicast traffic in that VLAN is forwarded to the CPU.

    sw2# configure igmp snooping filters per-vlan
    sw2#disable igmp snooping vlan test
    sw2#disable igmp snooping vlan test2
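On a switch with many VLANs, the list of VLANs to touch can be derived from port membership. This Python sketch is illustrative only: the `vlan_ports` mapping is hypothetical input you would fill in from the switch configuration, and the functions merely generate the same CLI commands used above; nothing here talks to the switch.

```python
from typing import Dict, List, Set


def vlans_on_ports(vlan_ports: Dict[str, Set[int]], storm_ports: Set[int]) -> List[str]:
    """Names of VLANs that have at least one member port in storm_ports."""
    return sorted(name for name, ports in vlan_ports.items() if ports & storm_ports)


def igmp_snooping_off_commands(vlan_ports: Dict[str, Set[int]], storm_ports: Set[int]) -> List[str]:
    """The XOS commands from this article, generated for every affected VLAN."""
    commands = ["configure igmp snooping filters per-vlan"]
    commands += [f"disable igmp snooping vlan {name}"
                 for name in vlans_on_ports(vlan_ports, storm_ports)]
    return commands


if __name__ == "__main__":
    # Hypothetical membership: test and test2 carry the looped ports 11-12.
    membership = {"test": {11, 12}, "test2": {11, 12, 46}, "mgmt": {1}}
    print("\n".join(igmp_snooping_off_commands(membership, {11, 12})))
```

With that hypothetical membership, the output matches the three commands entered above, and VLANs that do not carry the storm ports are left untouched.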

After disabling IGMP snooping on the looped VLANs, only about 3,000 pps of broadcast hits the CPU:

    sw2# debug hal show device port-info system unit 0 | include cpu
    MC_PERQ_PKT(0).cpu0  :   4,908,231      +6,352     2,994/s
    MC_PERQ_PKT(7).cpu0  :      11,214          +3         1/s

CPU utilization drops to about 15%:

    sw2# sh cpu-monitoring | exclude 0.0
    CPU Utilization Statistics - Monitored every 5 seconds
    -----------------------------------------------------------------------
    Process         5    10    30     1     5    30     1   Max            Total
                 secs  secs  secs   min  mins  mins  hour            User/System
                 util  util  util  util  util  util  util  util        CPU Usage
                  (%)   (%)   (%)   (%)   (%)   (%)   (%)   (%)           (secs)
    -----------------------------------------------------------------------
    System        9.3  10.5  16.5  13.7   2.7   0.4   0.2  34.1    0.14    18.96
    hal           3.5   3.7   3.2  10.2   2.0   0.3   0.1  51.5    1.42    10.67

    sw2#top
    Mem: 577816K used, 443708K free, 0K shrd, 127032K buff, 157064K cached
    CPU: 0.8% usr 16.5% sys 0.0% nic 69.9% idle 0.0% io 2.6% irq 9.9% sirq
    Load average: 5.12 2.77 1.10 3/208 2015
      PID  PPID USER     STAT   RSS %MEM CPU %CPU COMMAND
     1329     2 root     SW       0  0.0   1 17.7 [bcmRX]

Summing up, these switches are good as L2 aggregation switches or as P routers in MPLS networks. If you are planning to use the X670 as a combined L3/L2 switch, protecting the CPU will be a nightmare.

Update (02/2021):

In ExtremeXOS 16.2.5-Patch1-22 (April 2020), a storm-control fix was announced:

xos0063205 Even though the traffic rate is below the configured flood rate limit, traffic is dropped.

But, as usual, it was not completely fixed.

I configured the out-actions log and disable-port for broadcast and multicast with a threshold of 500 pps, and log only for unknown-destmac with the same threshold:

    configure port 1 rate-limit flood broadcast 500 out-actions log disable-port
    configure port 1 rate-limit flood multicast 500 out-actions log disable-port
    configure port 1 rate-limit flood unknown-destmac 500 out-actions log

Then I fired up the traffic generator with a random destination MAC pattern at 1,000 pps.

The problem is that the disable-port action is triggered when any flood type reaches its threshold. In our case, unknown-destmac traffic above 500 pps should only be logged, and the port should stay up. However, the port is disabled:

    sw2#sh ports 1 rate-limit flood out-of-profile refresh
    Port Monitor                             Fri Feb 12 11:48:33 2021
    Port      Flood Type       Status            Counter
    #-------- ---------------- -------------- ----------
    1         Unknown Dest MAC Out of profile     109336
    1         Multicast        Out of profile     109336
    1         Broadcast        Out of profile     109336

    sw2# sh log
    02/12/2021 11:52:14.71 Info:vlan.msgs.portLinkStateDown Port 1 link down
    02/12/2021 11:52:14.70 Info:vlan.msgs.FldRateOutActDsblPort Port 1 disabled by Flood Control Rate Limit because the traffic exceeded the configured rate.

Tested on 16.2.5.4 and 16.2.5.4-patch1-29.
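The gap between the expected and the observed behavior can be stated precisely with a small Python model. The thresholds and out-actions mirror the port 1 configuration above; `expected_actions` applies only each flood type's own actions, while `observed_actions` encodes what this test showed (one type over its limit fires every configured action on the port). It models the symptom only, not the firmware internals, and treating "reaches the threshold" as strictly greater than is an assumption.

```python
from typing import Dict, Set, Tuple

# flood type -> (threshold in pps, out-actions), mirroring the port 1 config above
CONFIG: Dict[str, Tuple[int, Set[str]]] = {
    "broadcast": (500, {"log", "disable-port"}),
    "multicast": (500, {"log", "disable-port"}),
    "unknown-destmac": (500, {"log"}),
}


def expected_actions(rates: Dict[str, int]) -> Set[str]:
    """Intended behavior: each flood type fires only its own out-actions."""
    actions: Set[str] = set()
    for kind, (limit, acts) in CONFIG.items():
        if rates.get(kind, 0) > limit:
            actions |= acts
    return actions


def observed_actions(rates: Dict[str, int]) -> Set[str]:
    """Behavior seen in the test: any type over its limit fires every action on the port."""
    if any(rates.get(kind, 0) > limit for kind, (limit, _) in CONFIG.items()):
        return set().union(*(acts for _, acts in CONFIG.values()))
    return set()
```

For the 1,000 pps unknown-destmac test, `expected_actions` returns only `{"log"}`, whereas `observed_actions` also includes `"disable-port"`, which is exactly the port shutdown seen in the log.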

Hopefully this will be fixed in the next releases.